As cloud costs rise, hybrid solutions are redefining the path to scaling AI

Deloitte research reveals how rising cloud costs are prompting organizations to blend legacy systems with emerging solutions to scale AI more efficiently

As organizations expand their use of artificial intelligence, they face a critical challenge: scaling their computing infrastructure to keep pace with demand while controlling rising costs. The stakes are high. Without the right strategy, companies risk spiraling expenses and slower innovation.

That’s why many leaders aren’t waiting for budgets to break. They’re proactively reassessing where and how they run their AI workloads. To understand this issue, Deloitte surveyed 60 executives from data centers and asked how they and their clients are planning for the next wave of AI-driven computing.

Costs are a big concern. When organizations launch AI projects, they often turn to public cloud computing platforms to give them the computing power they need—fast and without requiring a big upfront investment in hardware.

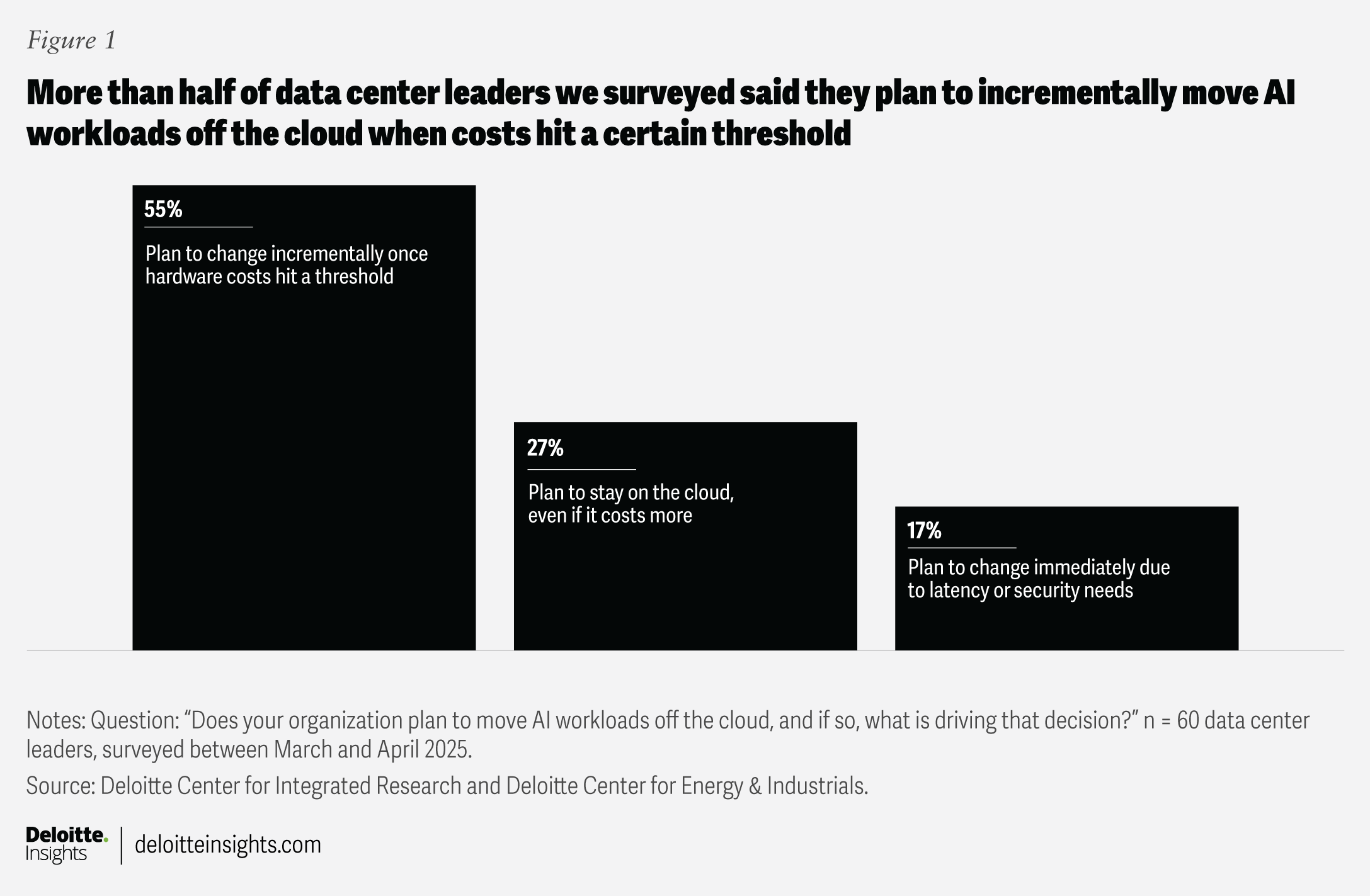

But as AI usage scales, these cloud costs can balloon fast. More than half of the data center leaders we surveyed say they plan to incrementally move AI workloads off the cloud when their data-hosting and computing costs hit a certain threshold (figure 1).

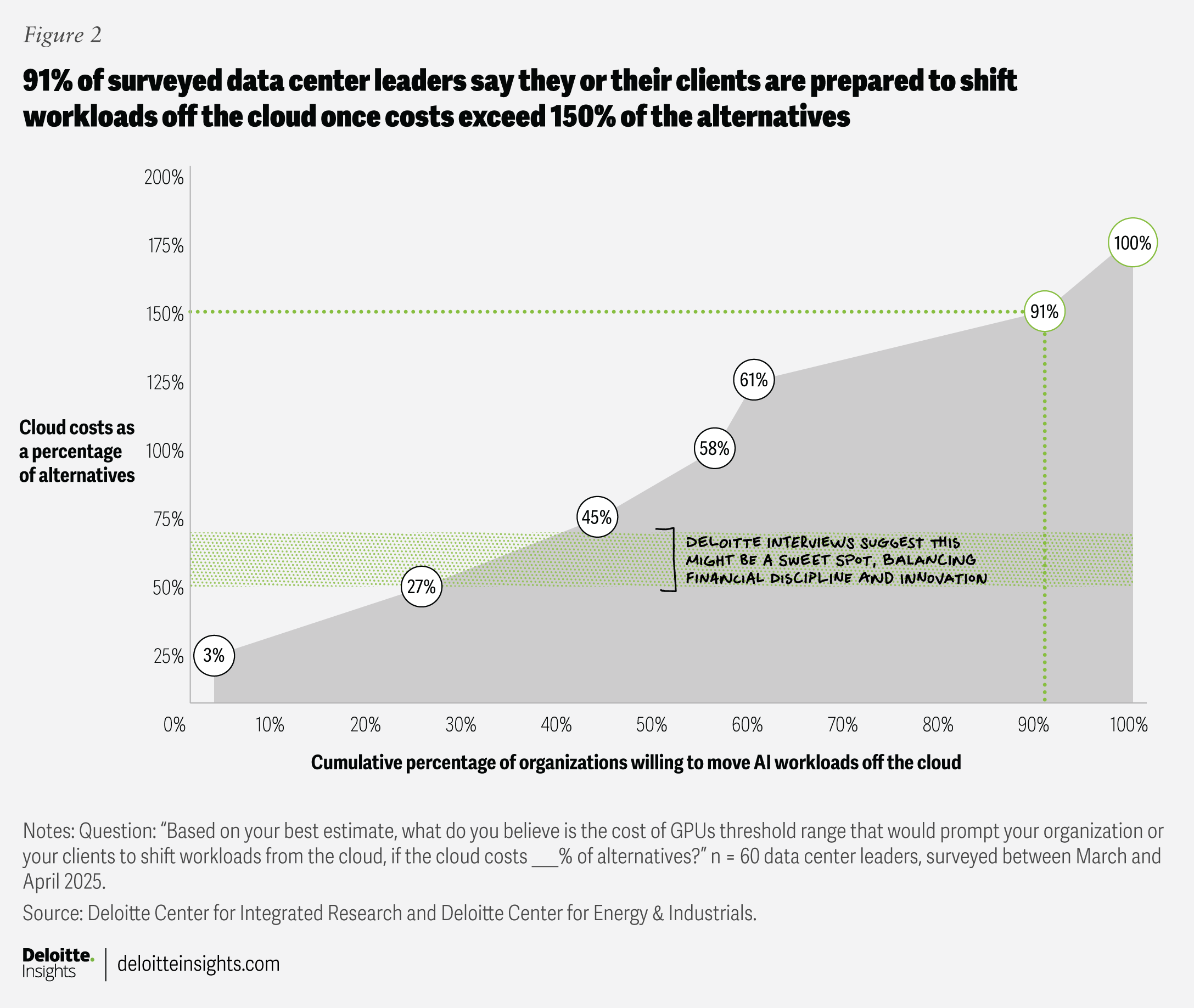

What exactly is that threshold? It depends on the organization, of course. The data center leaders in our survey manage facilities serving multiple client organizations—from large enterprises to smaller companies leasing shared space—so the decision can affect a broad range of businesses. Roughly a quarter of the respondents say they or their clients are ready to make the move as soon as cloud costs reach just 26% to 50% of alternatives, showing high sensitivity to even modest price changes. At a higher cost threshold, most respondents indicate a firm limit: As soon as cloud expenses exceed 150% of the cost of alternatives, 91% are prepared to shift workloads elsewhere (figure 2).

Previous Deloitte research, based on interviews with more than 60 global client technology leaders across industries, suggests many organizations may be waiting too long before making a move. For many organizations, the ideal threshold might be closer to when cloud costs hit around 60% to 70% of the alternatives. Financial discipline—such as avoiding long-term, fixed vendor agreements—can give organizations flexibility. That way, they can start in the cloud, closely monitor costs, and avoid getting locked into spending that can quickly outpace alternatives. Businesses can keep innovating without letting costs spiral out of control.
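The threshold reasoning above comes down to simple arithmetic: divide what the cloud costs by what an equivalent alternative would cost, then see where that ratio falls. The following is a minimal sketch of that check, not Deloitte's methodology. The `CostSnapshot` type, the `classify` function, the label strings, and every dollar figure are hypothetical and chosen only for illustration; the 60% to 70% band and the 150% limit are the only values taken from the text.

```python
from dataclasses import dataclass

# Ratios of cloud cost to alternative cost, taken from the article.
REVIEW_BAND_LOW = 0.60   # lower end of the 60% to 70% band from earlier research
REVIEW_BAND_HIGH = 0.70  # upper end of that band
FIRM_LIMIT = 1.50        # level beyond which 91% of respondents would shift


@dataclass
class CostSnapshot:
    """One month of costs. All values used below are hypothetical."""
    month: str
    cloud_cost: float        # what the workload costs in the public cloud
    alternative_cost: float  # estimated cost of running it elsewhere

    @property
    def ratio(self) -> float:
        if self.alternative_cost <= 0:
            raise ValueError("alternative_cost must be positive")
        return self.cloud_cost / self.alternative_cost


def classify(snapshot: CostSnapshot) -> str:
    """Place the cloud-to-alternative ratio relative to the article's thresholds."""
    r = snapshot.ratio
    if r > FIRM_LIMIT:
        return "past firm limit"
    if r > REVIEW_BAND_HIGH:
        return "above review band"
    if r >= REVIEW_BAND_LOW:
        return "in review band"
    return "below review band"


if __name__ == "__main__":
    # Hypothetical monthly figures showing cloud spend growing as AI usage scales.
    history = [
        CostSnapshot("Jan", 40_000, 100_000),
        CostSnapshot("Apr", 65_000, 100_000),
        CostSnapshot("Jul", 120_000, 100_000),
        CostSnapshot("Oct", 160_000, 100_000),
    ]
    for snap in history:
        print(f"{snap.month}: ratio {snap.ratio:.0%} -> {classify(snap)}")
```

In practice the hard part is estimating `alternative_cost`, which would need to account for hardware, facilities, staffing, and migration effort. The sketch treats it as a given input.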

With this realization, many organizations are now looking for smarter ways to handle their AI workloads. Hybrid models that blend public cloud services with a range of new alternatives are becoming increasingly attractive to the companies we surveyed.

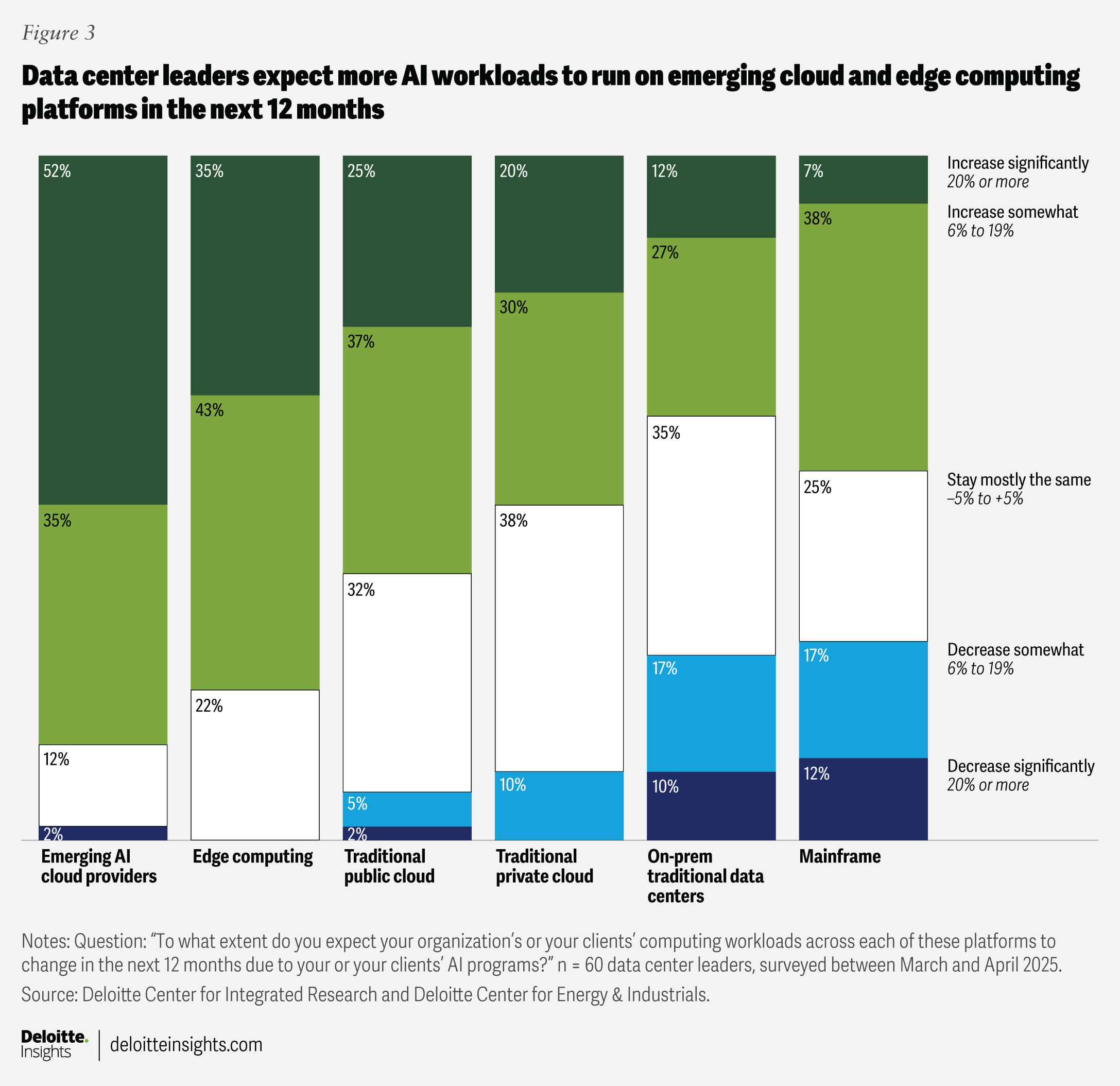

Leading the shift are emerging AI cloud providers: new, specialized services built specifically for AI that can be more cost-effective than alternatives. Among the data center leaders in our survey, 87% say they or their clients plan to ramp up their use of emerging AI clouds in the year ahead (figure 3).

Not far behind is edge computing—that is, processing data right where it’s generated, whether on smart sensors, connected devices, or small servers located in the field, like a retail store or factory floor. As AI chips and smart devices become more advanced, organizations are increasingly turning to this option, rather than relying solely on centralized cloud infrastructure. In our survey, 78% of respondents say they anticipate their organizations or their clients will boost use of edge technology in the next 12 months.

This shift points to a growing effort to manage computing costs as AI scales up, with organizations looking to diversify beyond traditional public cloud and gain greater flexibility by balancing edge processing and emerging AI cloud platforms.

At the same time, many organizations are also reimagining their existing on-premises systems—servers and data centers managed within central company facilities—to better align with new AI demands. A major decision point there is balancing how quickly they need technical upgrades against the cost of investing in that transformation now or later. Whether they reconfigure, reactivate, recommission, or completely reimagine the data centers, it’s not a question of if they need to make changes, but when.

All in all, the research shows that as organizations expand their use of AI, they should consider a hybrid approach to computing—one that draws on the full range of modern technologies. By blending traditional systems with emerging cloud services and the ability to process data right where it’s created, organizations can better manage costs, speed, and security. Ultimately, finding the right mix of technologies can help companies keep costs in check and set themselves up to get the most out of AI in the years ahead.