From pilots to practice: Scaling AI use in federal health

Federal health agencies can harness AI to improve the efficiency of both health services and government operations

Mark Urbanczyk

Dr. Juergen Klenk

Alison Muckle Egizi

Joe Mariani

Ipshita Sinha

Federal health agencies stand on the cusp of leveraging artificial intelligence to markedly improve resource efficiency and accelerate innovation.1 AI innovation can make a big difference for federal health agencies by streamlining administrative processes and optimizing expenses, freeing up scientists to focus on breakthrough research instead of administrative tasks and enabling clinicians to spend more time with patients.2 The first drug developed using generative AI has completed Phase 1 human clinical trials.3 And a new AI model has the potential to address up to 17,000 diseases with insufficient treatment options by repurposing existing drugs for “off-label” use. This alone offers new hope to patients with rare diseases.4

While the potential value seems clear, federal health agencies struggle to scale, and many AI-based efforts remain stuck in the pilot phase.5 Deloitte’s 2024 State of Generative AI in the Enterprise survey found that over two-thirds of surveyed organizations had moved fewer than a third of their AI initiatives into full-scale production.6

However, scaling AI is important if agencies are to achieve both efficiency and mission goals. Scaling AI could mean that everybody who could benefit from using an AI tool can do so. In some cases, that may mean enterprisewide implementation; in others, it could mean targeted implementation with just the team that performs a specialized function where the tool could generate maximum value. For instance, an agency might adopt an advanced AI diagnostic tool selectively, using it primarily in specialized clinical settings where it improves patient care. Yet, regardless of the approach, the foundation to support scalable AI should be solidly in place.

Having said that, leaders can’t simply flip a switch to accelerate AI integration. Federal health agencies face obstacles, including data-quality challenges, interoperability issues, insufficient resources, and security hurdles.7 But there’s a path forward for agencies to move beyond experimentation and create an environment to deploy AI-based initiatives at scale safely, securely, and effectively. By scaling AI strategically, federal health leaders can turn resource limitations into catalysts of innovation, potentially increasing the quality and efficiency of government services.

This article illustrates how AI at scale can improve job experience and performance for common federal health roles. We also outline five important capabilities to achieve AI scalability: data, ethics, infrastructure, workforce, and leadership. Success is about more than adopting new technology; it is about improving health outcomes, making smarter use of resources, and expanding operational capacity.

Envisioning AI at scale in federal health

To assess the value of using AI at scale for the health sector, we looked at four federal health agency roles—grants manager, scientist, HR coordinator, and clinician—and envisioned what these roles could look like with fully functional AI-based technologies integrated into daily work.

1. The government grants manager

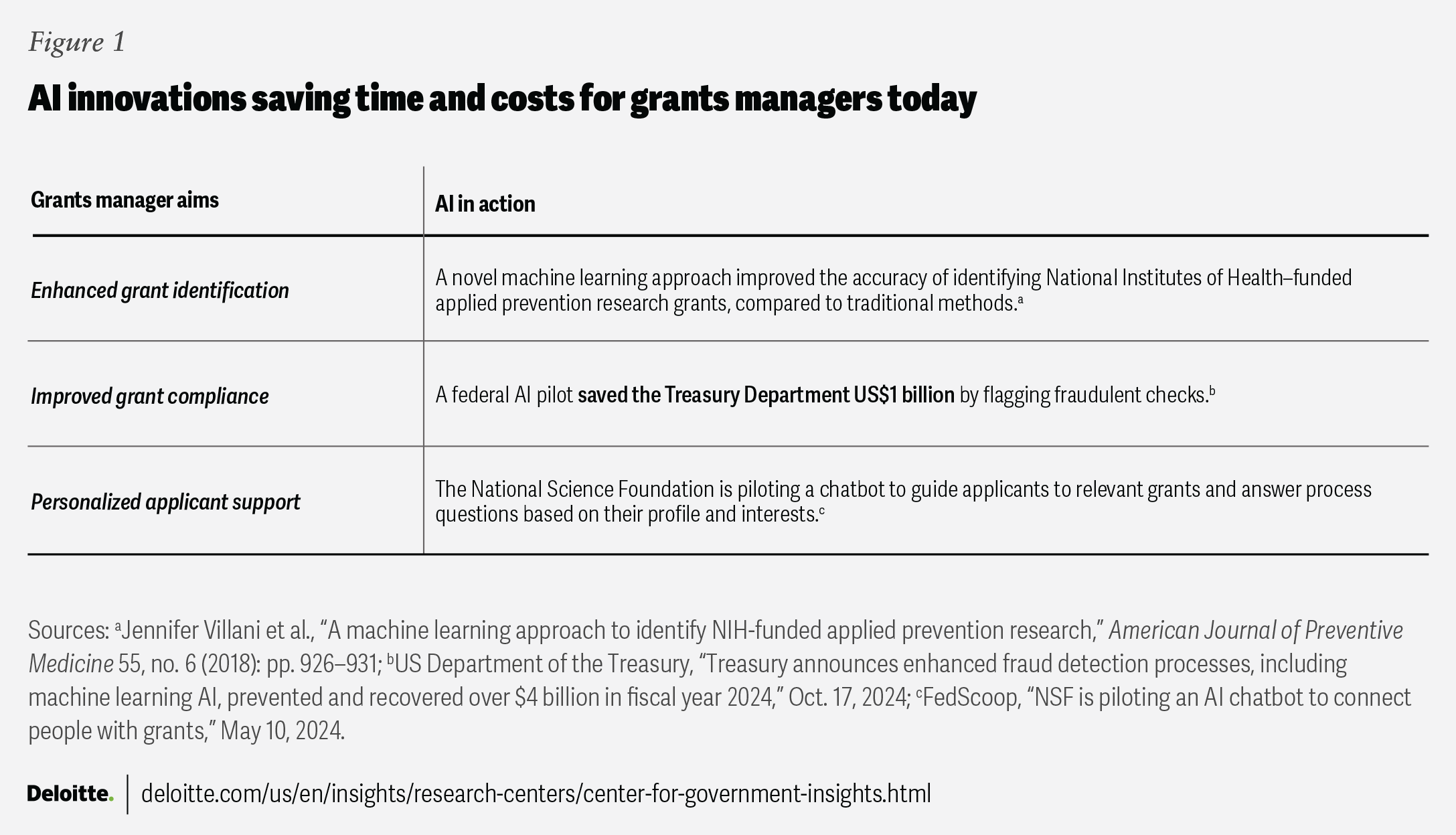

Government grants managers generally facilitate federal research and innovation and support programs. But with data to process and stakeholders to consult, award selection and management can become burdensome. That’s where grants managers can bring in AI as a game-changer, using it to strategically allocate attention and resources, help triage grant application reviews, improve efficiency of grant compliance and risk monitoring, and personalize applicant support (figure 1).

Grants managers in federal health agencies are already beginning to harness the power of AI to boost performance. The National Institutes of Health (NIH) introduced an automated, AI-driven referral tool in 2022, designed to aid in the assignment of review applications. Leveraging data from previous review cycles, the tool streamlines the process and eliminates manual work by identifying duplicate submissions and directing applications to the appropriate review branches.8

What is possible with AI at scale?

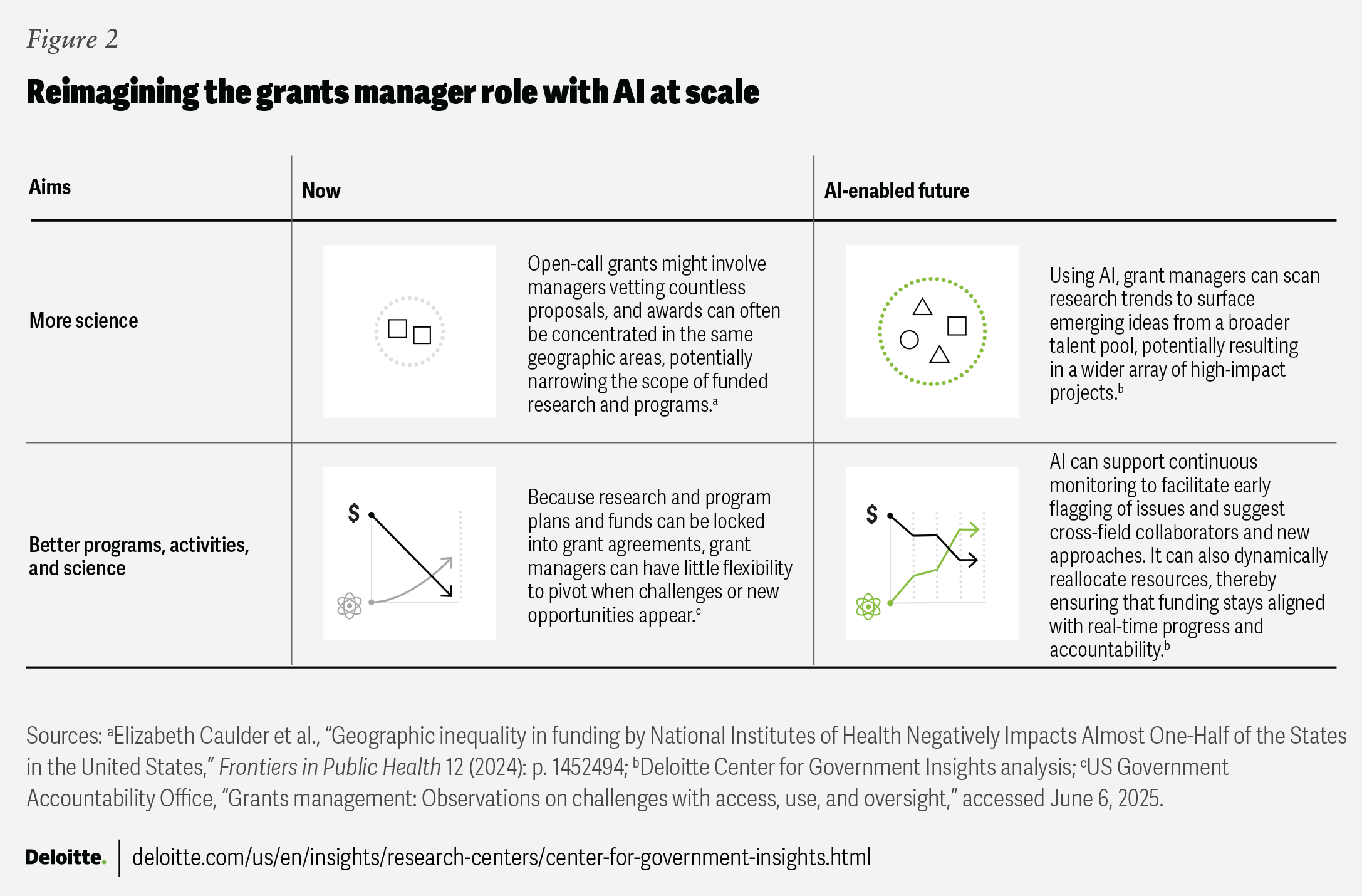

These innovations mark progress yet represent only the beginning of AI’s transformative potential. At scale, AI can support efforts to reinforce grants managers as strategic leaders of scientific progress and innovative government programs and activities. Empowered by AI, grants managers can better deliver proactive technical assistance and continuous quality improvement, and enable real-time identification of fraud, waste, and abuse to improve outcomes for people. Grants managers can also use AI to facilitate adaptive grants management, where grants flexibly evolve in real time as new data emerges or breakthroughs occur.

In this reimagined landscape, grants managers can reshape the way grants deliver meaningful change, achieving more and better science, programs, and activities while maximizing resource efficiency (figure 2).

2. The government scientist

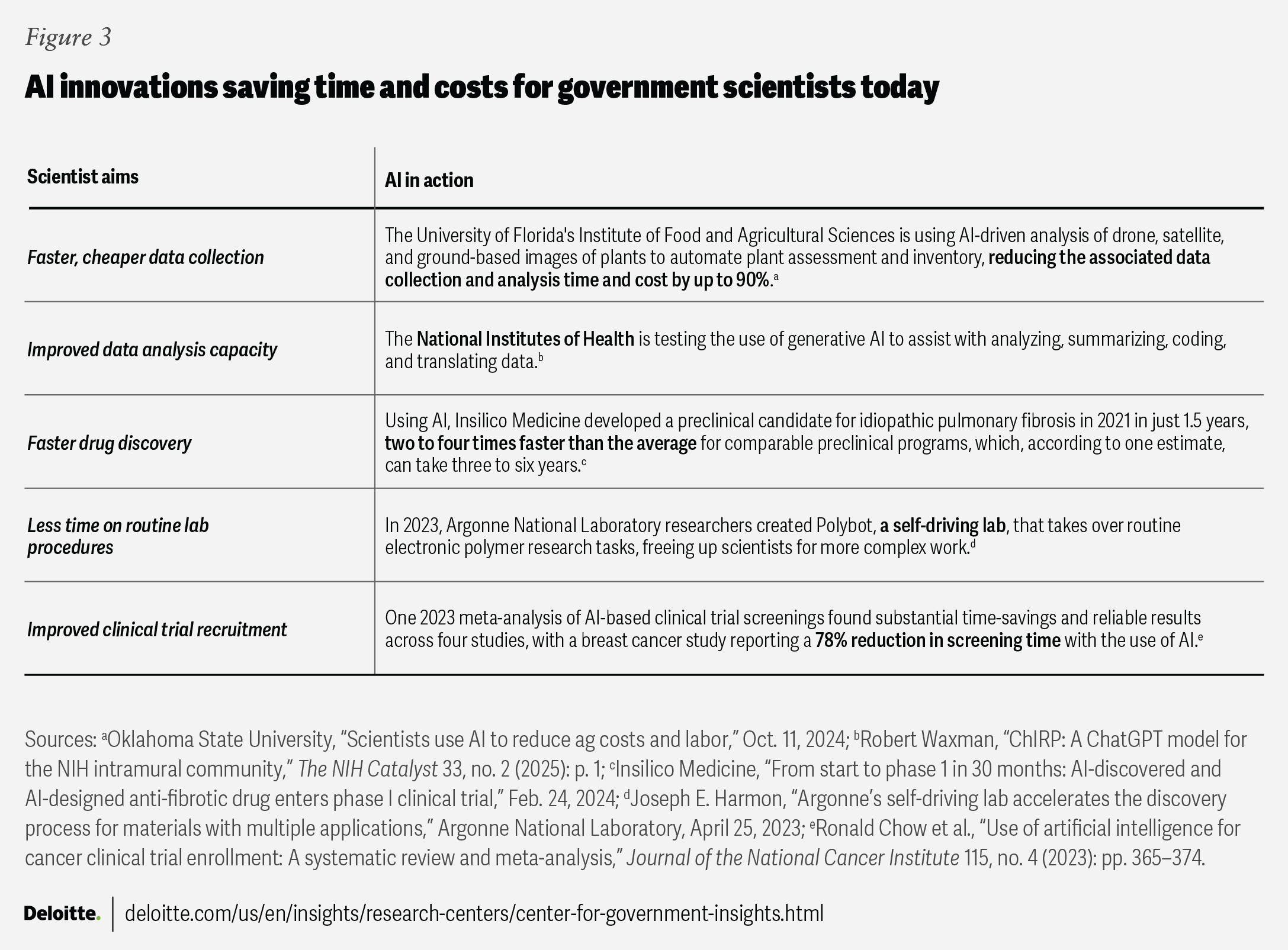

Whether it’s developing next-generation diagnostics, testing new therapies, or evaluating drug safety, government health scientists generate new knowledge and evidence that can protect and improve the nation’s health. With the help of AI, scientists can augment their roles by streamlining workflows, speeding up data interpretation, and enabling greater collaboration. This should allow them to focus on innovative thinking and hypothesis generation rather than computation. AI also shows potential in drug discovery, where it can mine massive data sets to identify hard-to-find targets,9 and in reducing the time and costs of clinical trials.10 One life science breakthrough used a gen AI model to help solve a protein-folding problem that plagued scientists for half a century.11 The researchers were recognized with the 2024 Nobel Prize in Chemistry.12

Federal health leaders are already leveraging AI to facilitate the scientific process. The NIH’s TrialGPT tool helps improve the match of patients to clinical trials.13 The Food and Drug Administration is developing an AI-based computer assessment tool to improve the accuracy and consistency of drug labeling.14 An NIH study developed an AI tool aiming to match patients to cancer drugs by analyzing molecular characteristics of tumors and predicting which treatments might be most effective.15 More and more, AI innovations are augmenting federal health-led scientific research (figure 3).

What is possible with AI at scale?

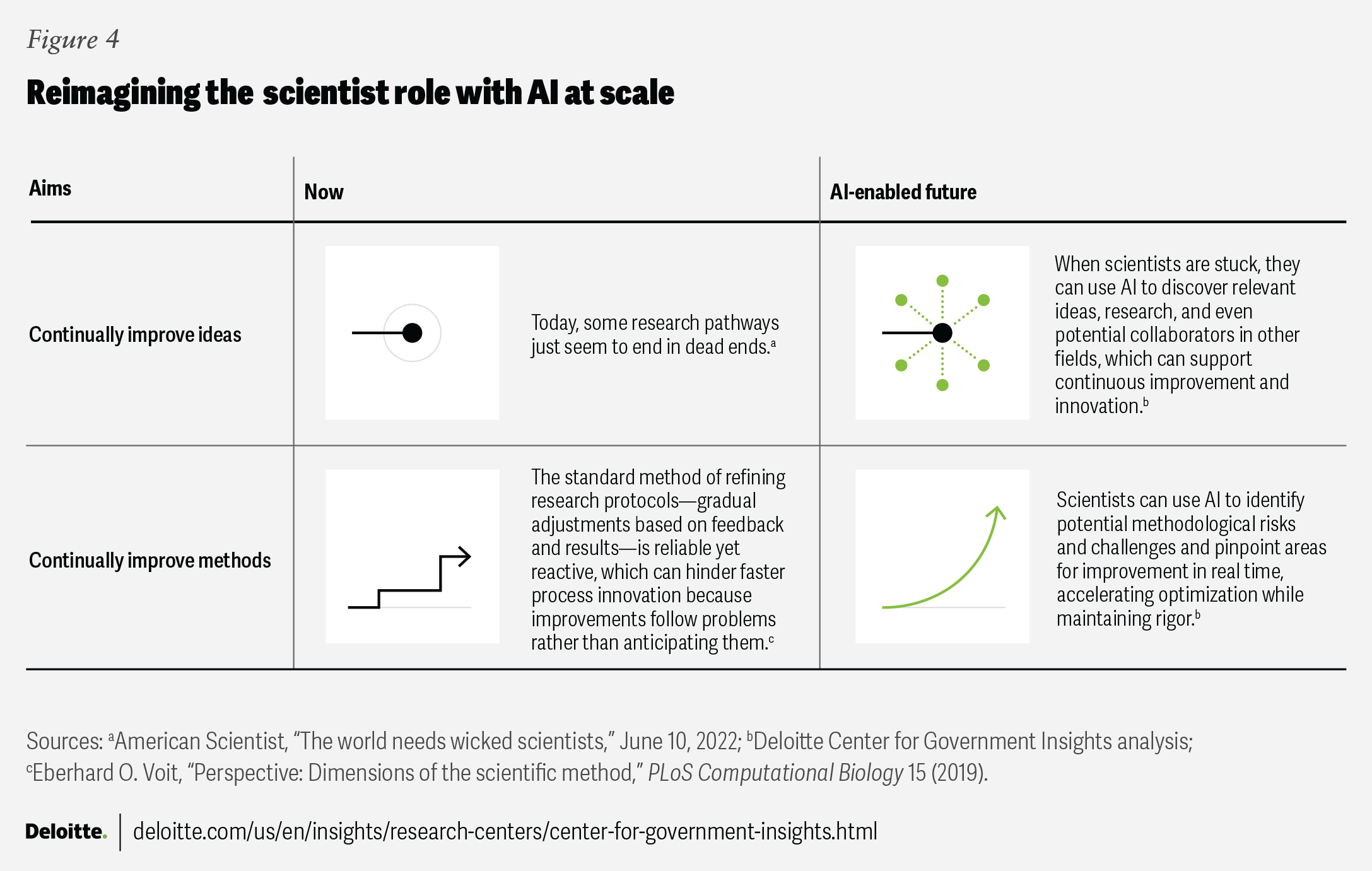

Scientists do hit roadblocks—encountering unexpected data patterns, combing through massive data sets, or hitting dead ends pursuing answers to complicated problems. During those times, scientists can use AI at scale to propose alternative protocols, identify overlooked data, or highlight new variables to test. Advanced analytics have the potential to integrate cross-disciplinary data, approaches, and insights, sparking novel experimental angles and breakthrough discoveries. At scale, future scientists can use AI to continually improve both ideas and methods, fueling new pathways to innovation (figure 4).

3. The human resources coordinator

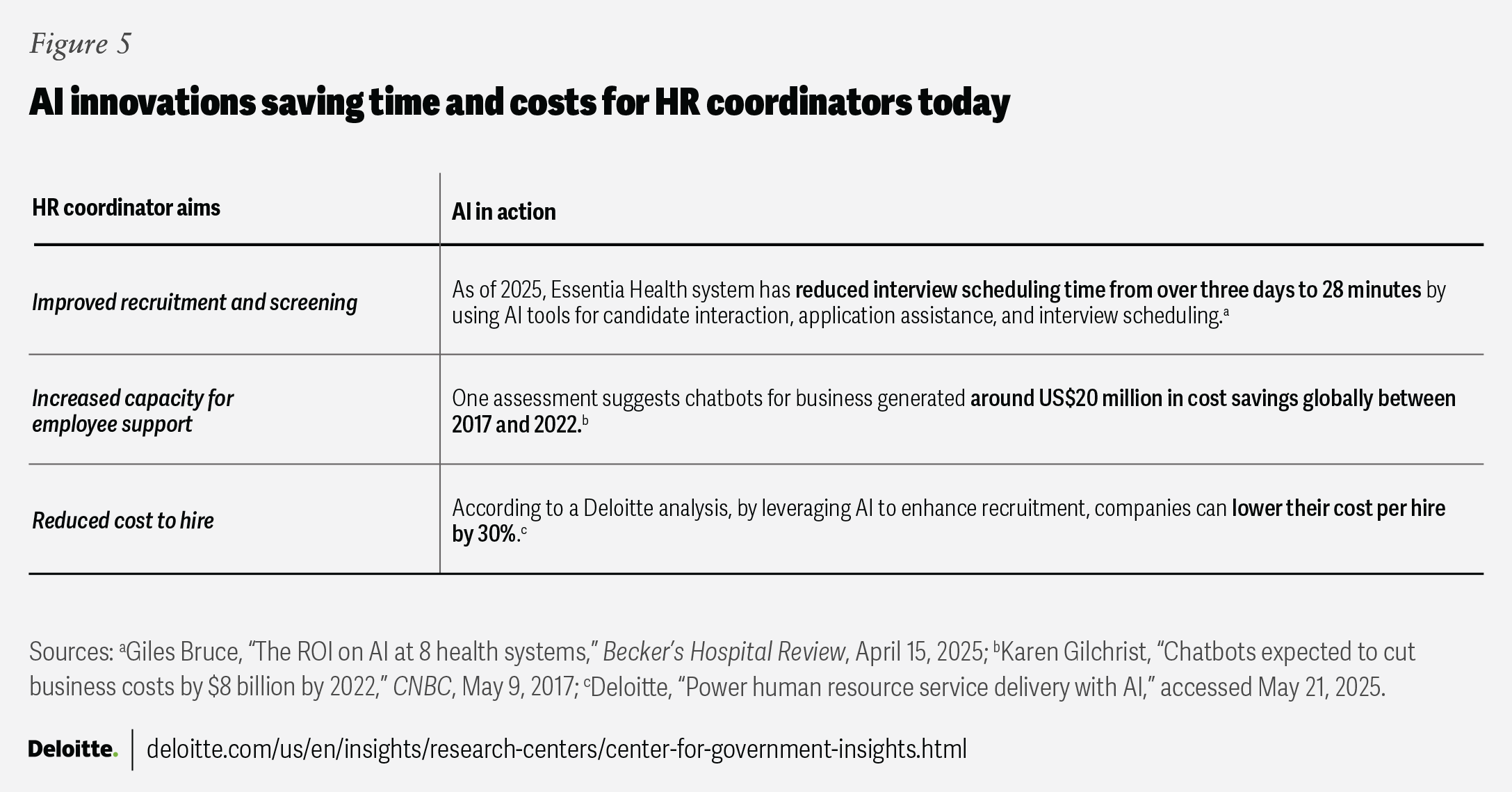

AI can support the optimization of human resources coordination tasks, such as explaining benefits and analyzing employee profiles to trace skill gaps. Federal health agencies are already taking decisive steps to incorporate AI tools into workforce planning and management. For instance, the Department of Health and Human Services (HHS) has already developed standard AI position descriptions and job analyses using the Office of Personnel Management’s (OPM) Direct Hire Authority (DHA) framework.16 AI tools are helping to transform HR functions, freeing up HR coordinators to focus more time on strategic workforce planning (figure 5).

What is possible with AI at scale?

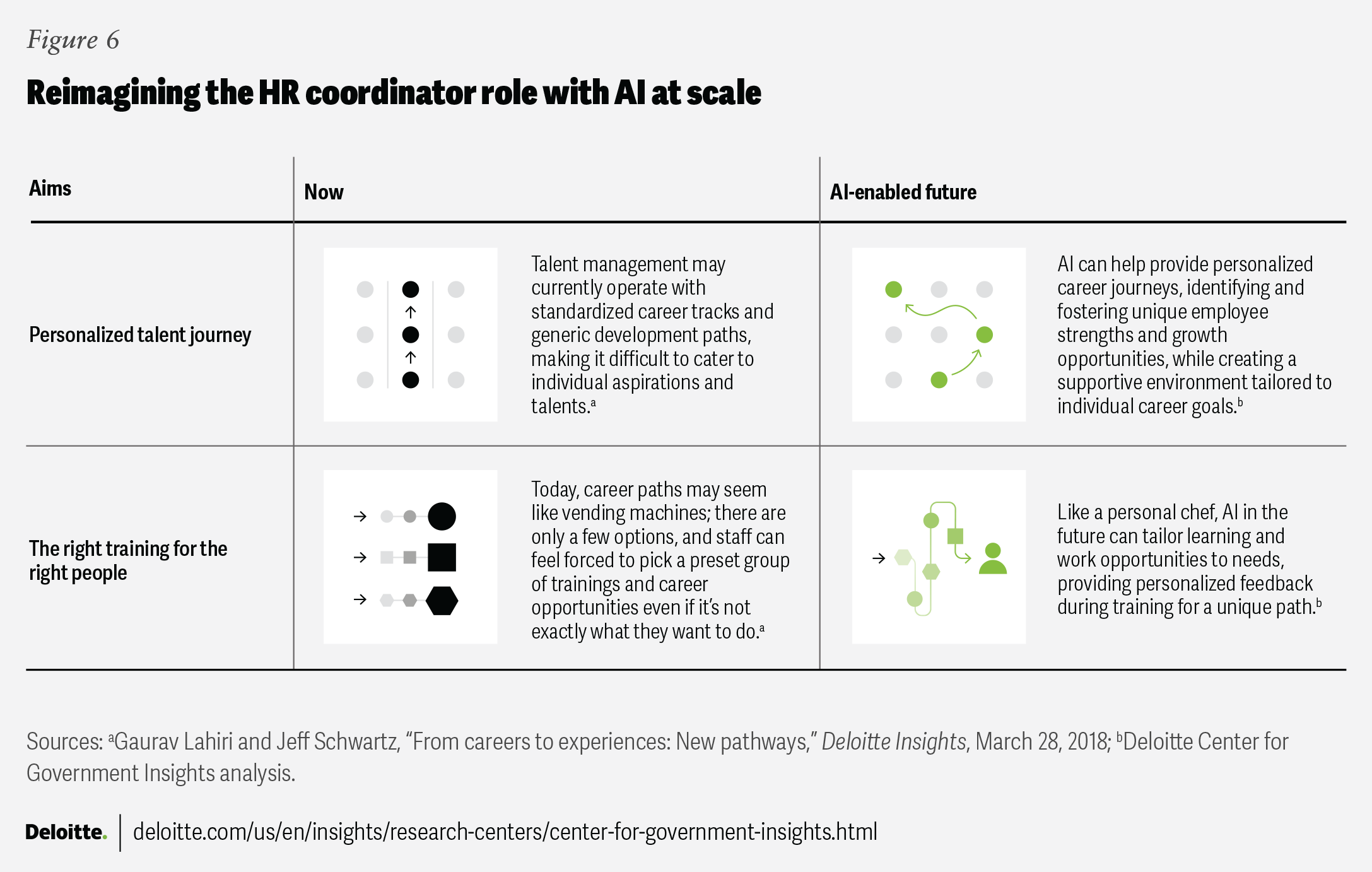

Among other aims, HR coordinators seek to improve employee engagement, optimize hiring processes, and connect the right people with the right training. AI at scale can not only help minimize administrative burdens and free up more time for strategic thinking, but it can also help HR coordinators create personalized employee talent journeys—from onboarding to exit—and design custom learning and development opportunities (figure 6).

4. The clinician

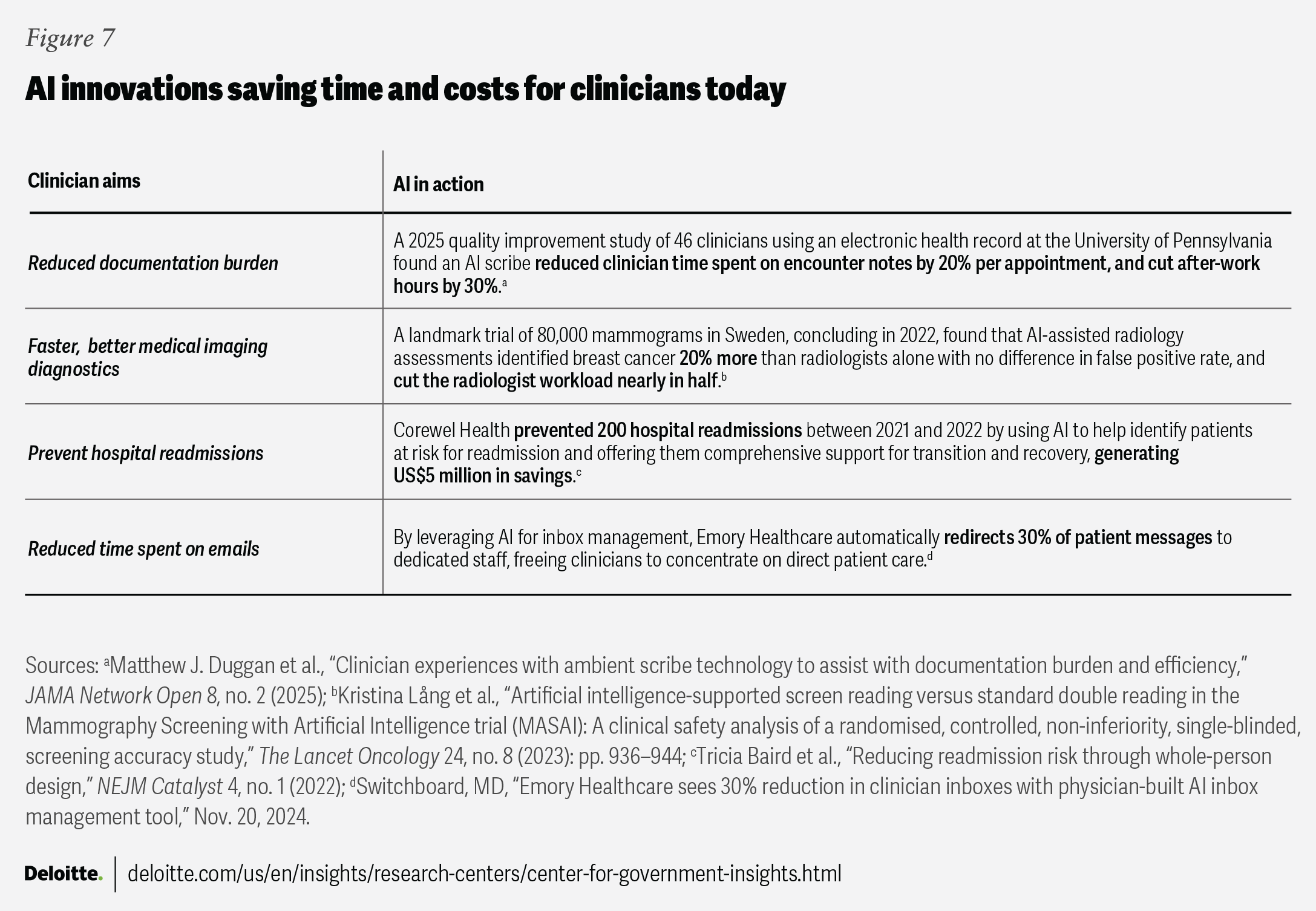

Clinicians across federal agencies tend to balance patient care, research, and regulatory responsibilities while navigating increasing administrative demands. The health care industry has already been saving time and reducing costs with the help of AI.17 Using AI, clinicians can reduce time spent on documentation, improve medical imaging, prevent hospital readmissions, and save time on emails (figure 7).

In December 2024, the Department of Veterans Affairs (VA) expanded its testing of Medtronic’s GI Genius system to help improve colorectal polyp detection during colonoscopies.18 The program is now installed in 140 VA facilities.19 Additionally, the Veterans Health Administration (VHA) is piloting ambient scribe technology to help reduce physician burnout by recording patient visits and automatically generating visit summaries for clinician review.20 Approved notes will be directly incorporated into electronic patient health records, which is expected to reduce documentation time.21 VHA is also exploring gen AI applications to assist with drafting emails, summarizing policy documents, and analyzing survey data, aiming to streamline tasks across the department.22

What is possible with AI at scale?

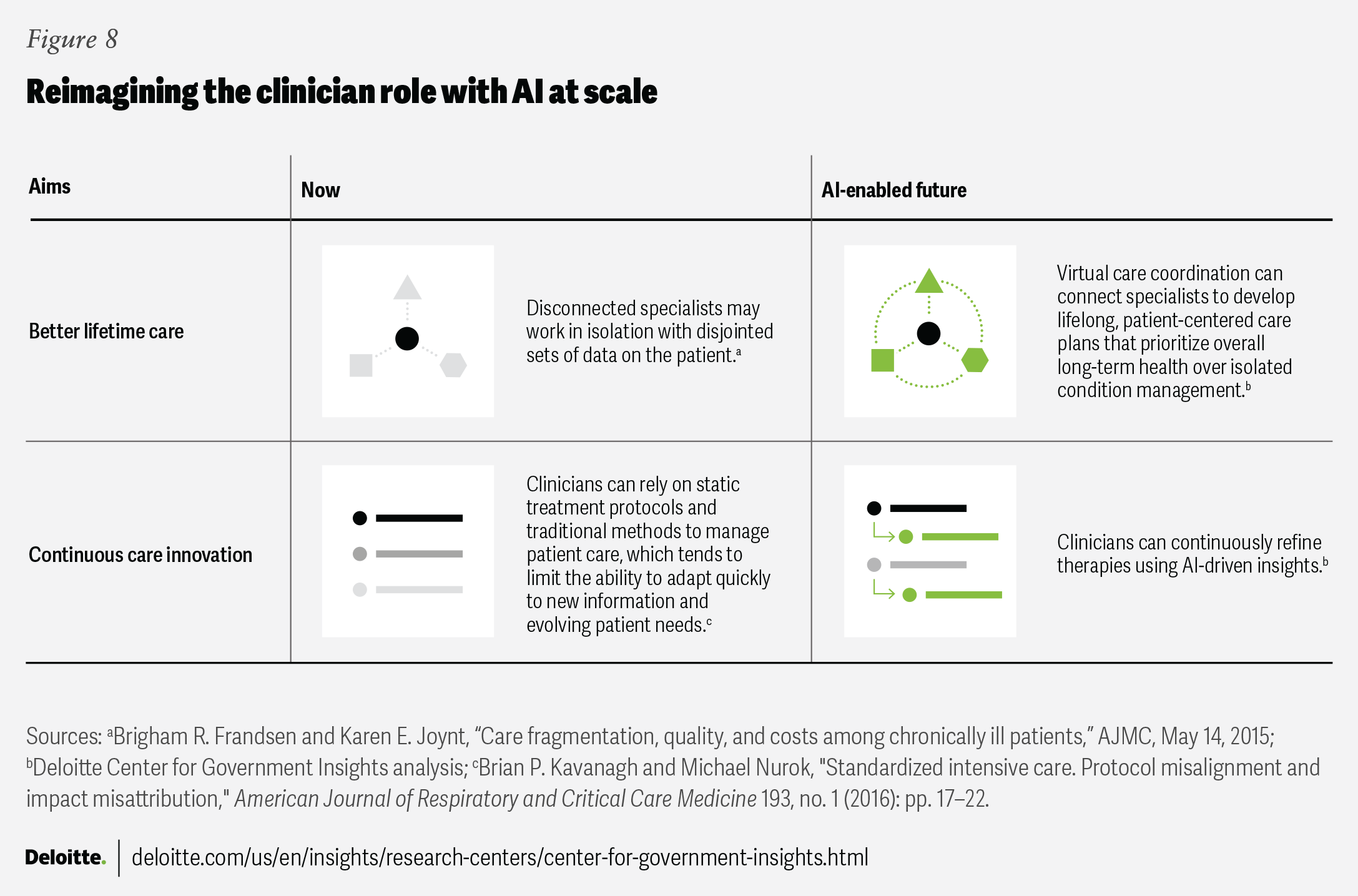

AI is already helping to transform patient care by streamlining clinician workflows, enhancing diagnostic accuracy, and reducing documentation burdens. And its potential at scale goes even further. Advanced, real-time decision support systems could provide clinicians with more tailored insights—from early diagnostic signals to personalized treatment recommendations—while preserving the clinician’s essential role in patient care. To improve the success of AI implementations at scale, agencies can include clinicians at the center of the process.23

In this vision, clinicians can use AI tools to aggregate and analyze patient data. This can help surface potential issues, optimize resource allocation, and improve care coordination in busy clinical environments. Supported by AI, clinicians of the future could achieve better lifetime care and continuous care innovation (figure 8).

Realizing the future of AI in federal health: Five capabilities for scale

These four personas (grants manager, scientist, HR coordinator, and clinician) point toward a future in which AI has the potential to dynamically shift how federal health work is done.24 But how can an agency scale a system designed to serve thousands or millions of people while safeguarding the population? We propose rethinking the fundamentals of readiness through five capabilities for scale: data, ethics, infrastructure, workforce, and leadership.

Data, data, and more data

AI models are built on data, but in federal health agencies, data fragmentation, inconsistency, and insufficient information make it difficult to train reliable models.25 Health data is scattered across electronic health records, insurance claims, and research databases,26 often in incompatible formats and with variable definitions. Even within agencies, legacy systems can complicate seamless data integration.27

Data challenges in health care have been persistent28 and can raise concerns, since AI trained on incomplete or outdated data can lead to misdiagnoses, resource misallocation, or ineffective policy decisions.29 Federal agencies recognize this issue and are taking steps to improve data quality. Initiatives such as the Department of Health and Human Services’ Health Data Initiative30 aim to create more standardized and interoperable health data ecosystems. But the health data landscape remains complex, and it can be challenging to optimize AI utilization at scale. To help improve federal health data readiness for scaling AI, agencies can:

- Prioritize targeted data improvement efforts. Instead of waiting for a comprehensive, centralized data overhaul, agencies can focus on incremental, high-impact fixes such as assembling a cross-agency task force to define priority data sets for AI applications. This is akin to the Testing Risks of AI for National Security Taskforce, which brings together federal agencies to implement safe and secure AI innovation.31 Agencies can also mandate real-time data quality checks that flag inconsistencies such as mislabeled and ambiguous data.32

- Consider federated AI to work around data fragmentation. Since health data may not be easily or safely centralized, agencies could invest in federated AI, which allows models to learn from distributed data sets without moving or exposing patient data.33 This tends to reduce privacy risks and can lessen the need for data-sharing agreements. Some pilot programs, such as the National Institutes of Health’s federated learning initiatives in cancer research, are already testing this approach.34 Scaling these efforts could accelerate AI adoption without waiting for perfect data integration.
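
To make the federated idea concrete, here is a minimal sketch of federated averaging, one common way to combine locally trained models. Everything in it is an illustrative assumption: the site names, the toy one-parameter model, and the functions `local_update`, `federated_average`, and `run_round` are hypothetical and not drawn from any agency system. Each site fits a model on its own records and shares only a model weight and a record count, so raw records never leave the site.

```python
from typing import Dict, List, Tuple

# Hypothetical toy data: each site holds (x, y) pairs that never leave the site.
SITE_DATA: Dict[str, List[Tuple[float, float]]] = {
    "site_a": [(1.0, 2.0), (2.0, 4.0)],
    "site_b": [(1.0, 2.0), (3.0, 6.0), (4.0, 8.0)],
}


def local_update(weight: float, records: List[Tuple[float, float]],
                 lr: float = 0.05, steps: int = 200) -> float:
    """Fit y = weight * x on one site's records with plain gradient descent."""
    for _ in range(steps):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * (weight * x - y) * x for x, y in records) / len(records)
        weight -= lr * grad
    return weight


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Combine site weights, weighting each by its number of records."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def run_round(global_weight: float) -> float:
    """One round: every site trains locally, then only weights are shared."""
    updates = [(local_update(global_weight, recs), len(recs))
               for recs in SITE_DATA.values()]
    return federated_average(updates)
```

Calling `run_round(0.0)` returns a shared weight learned from both sites, even though neither site's records were pooled. A production system would add safeguards this sketch omits, such as secure aggregation and access controls.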

Build ethical guardrails to help manage risks

While AI offers potential to improve the nation’s health and streamline federal health operations, it can introduce risks, including skewed decision-making, data security vulnerabilities, and a lack of transparency.35 In a recent forum, AI leaders across government and industry were torn between trial-and-error and structured, oversight-based approaches to governance.36 In 2024, Deloitte conducted a survey of 2,770 global business leaders, in which only 23% of respondents rated their organizations as highly prepared in the areas of AI risk and governance.37 Without deliberate governance, these risks could undermine patient safety. To help manage risks, federal agencies can:

- Partner with end users in the AI system design process. When technology implementation reserves end-user input for late-stage testing, it risks failing to achieve goals. Clinicians, researchers, data scientists, and grant managers who rely on AI tools can play a central role in shaping them if those tools are to meet promises of improving efficiency and outcomes. Human-centered design can help agencies tailor AI strategies to meet real-world needs and can reduce risks.

- Incorporate trust into every stage of AI development. Agencies can look to build AI systems to be transparent, accountable, and aligned with ethical standards. Integrating trustworthy AI principles early can prevent risks from manifesting downstream and can scale AI solutions in a way that is both responsible and sustainable. In 2023, Veterans Affairs approved an agencywide trustworthy AI framework that served as the foundation for safe and secure AI implementations and compliance across the entire agency.38

- Strengthen privacy and security safeguards. AI systems handling sensitive health data must comply with the Health Insurance Portability and Accountability Act and the Federal Information Security Modernization Act. Agencies could implement zero-trust architectures to minimize unauthorized access and enhance cybersecurity. The Office of Management and Budget has already issued directives in this regard.39 Agencies could also establish AI ethics review boards to evaluate high-risk AI deployments in health care settings.

- Align AI regulation with rapid technological advancements. AI innovation is outpacing regulatory frameworks, creating potential gaps in oversight.40 Because federal agencies are both AI adopters and regulators, agency leaders can proactively collaborate with policymakers to refine policies that balance innovation with safety. Procurement processes for AI tools could also accommodate iterative development, testing, and real-world validation.

Infrastructure is the foundation

Without a strong infrastructure in place, agencies cannot implement a scalable AI model.41 Neglecting it can lead to disjointed systems, inefficiencies, and security vulnerabilities. AI systems need to be built, tested, and deployed with reliability, security, and interoperability in mind. To help build durable infrastructure for scale, federal health agencies can:

- Invest in centralized AI marketplaces to standardize and scale. Rather than building AI tools in silos, agencies can establish centralized AI marketplaces: platforms on which vetted, approved models can be shared within and across agencies. This tends to reduce duplication, standardize security and privacy checks, and accelerate AI deployment. Such platforms are already becoming a reality in government. The Department of Defense’s Chief Digital and Artificial Intelligence Office has created a marketplace for AI models and technology. It is intended to accelerate and streamline the acquisition and use of AI models and technologies.42

- Embed security and auditability into AI infrastructure. Security should not be an afterthought. AI infrastructure should have built-in safeguards, such as automated privacy checks incorporated into platforms to maintain compliance with regulations. Auditability features could track AI decisions and data usage for accountability.

- Build for future expansion. Infrastructure should support long-term AI advancements. A scalable system tends to demand interoperability and modular, adaptable AI platforms that can integrate with emerging technologies.
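
One way to picture the auditability feature described above is an append-only log in which each entry is chained to the previous one by a hash, so that later tampering becomes detectable. The sketch below is illustrative only: the `AuditLog` class, its field names, and the example model and decision strings are hypothetical, not part of any agency platform.

```python
import hashlib
import json
from typing import Any, Dict, List


class AuditLog:
    """Append-only log in which each entry carries a hash of the previous one."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(body: Dict[str, Any]) -> str:
        # Serialize with sorted keys so the same content always hashes the same.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, model: str, decision: str, data_used: List[str]) -> None:
        """Append one AI decision along with the data fields it relied on."""
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {"model": model, "decision": decision,
                "data_used": data_used, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": self._digest(body)})

    def verify(self) -> bool:
        """Return True only if no entry has been altered or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical usage: log two decisions, then confirm the chain is intact.
log = AuditLog()
log.record("triage-model", "route to review branch A", ["application_text"])
log.record("triage-model", "flag possible duplicate", ["application_text", "applicant_id"])
print(log.verify())
```

Because each hash covers the previous entry's hash, changing any earlier record causes `verify()` to return `False`. A real deployment would also need protected storage and access controls, which this sketch does not cover.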

Workforce needs for scalable AI

Scaling AI in federal health agencies appears to be a workforce challenge as well as a technology challenge. AI tools are meant to support decision-making, not replace human capabilities. For AI to be truly effective, agencies can create workforce strategies that provide appropriate training and oversight based on job roles. To help support a workforce for scalable AI, federal health agencies can:

- Tailor AI training for different roles. Not every federal health employee needs to be an AI expert, but it could benefit an organization to have all staff fluent in how to leverage AI in their own roles. A successful workforce strategy may feature training tailored to specific job roles and requirements.

- Embed AI literacy at every level. AI literacy can go beyond technical skills. Agencies can build training programs that teach critical evaluation of AI outputs, so staff understand models’ uncertainty and verification methods. The Department of Health and Human Services’ planned AI Corps program aims to position AI experts in each of the department’s agencies to bolster expertise.43

- Build a culture of confidence, not blind trust. A well-trained workforce shouldn’t blindly trust AI tools or results—it should question and work to refine the technology. In this sense, it is important for agencies to consider cultivating a culture in which AI is seen as a decision-support tool, not a decision-maker, and in which employees feel empowered to escalate concerns. AI deployments can and should prioritize transparency and explicability.

Leadership: Align vision with action

Effectively scaling AI involves leaders thinking beyond the technology and focusing on how it aligns with agency missions. Initiatives can directly support agency goals, such as improving health outcomes, enhancing service delivery, or advancing scientific research. Leaders who view AI as a strategic asset for achieving specific mission objectives, not as an end in itself, could be better positioned to drive meaningful, long-term impact. Strong leadership is an important factor in long-term transformation. To unlock AI’s full potential, leaders should:

- Set a clear vision for how AI integrates into the agency’s strategy. Articulating measurable goals and communicating this vision across all organizational levels tends to foster clarity, collaboration, and commitment among stakeholders. Hiring chief AI officers in various federal agencies can help embed AI into operations.44

- Track progress and measure mission impact. Dashboards can translate complex metrics into actionable insights, enabling leaders to assess which AI tools are most effective in delivering mission objectives. By evaluating tools against predefined metrics for mission impact—such as advancing the quality of patient care or boosting operational performance—leaders can prioritize scaling initiatives that help provide the greatest value.

- Evaluate costs dynamically. AI tools can provide leaders with real-time insights into resource usage patterns, enabling them to proactively adjust allocations and optimize spending as demand scales or fluctuates.

Agentic AI: The next leap in AI readiness

With these foundations in place, federal health organizations can not only reap the benefits of AI today but also be ready for the next big leap in AI itself. Forward-thinking government leaders are likely looking beyond using AI to automate single administrative tasks. The next step—and perhaps the future of AI at scale in federal health—is agentic AI.

AI agents are the conductors of the AI world. Where a single task could be automated by AI, AI agents can coordinate the work of several other automation tools and collaborate with human experts to remake entire workflows. This can allow federal health leaders to augment more complex workflows using AI. Federal health agencies may look to agentic AI to provide real-time answers to constituents, personalize employee benefits planning, and propose grant changes to improve outcomes based on live data.45 These AI agents can continuously scan transactions, flag anomalies, and check resource use for efficiency.

Deloitte analysis of federal Occupational Information Network data suggests that AI agents carry the potential to reshape grants management: In the Department of Health and Human Services alone, AI automation may be able to save more than six million hours—approximately 40% of the total hours that the federal grant application review process usually takes.46 AI agents may soon be able to aid data analysts, engineers, physicians, and psychologists, creating a more adaptive, holistic approach to federal health funding.47
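
As a back-of-the-envelope check on these figures, the short sketch below works out what they imply about the total. It assumes, purely for illustration, that "more than six million hours" and "approximately 40%" can be treated as exact values; the variable names are hypothetical and the result is a rough estimate, not a figure from the underlying analysis.

```python
# Figures quoted in the text; both are approximate in the source.
hours_saved = 6_000_000   # hours AI automation may be able to save
share_of_total = 0.40     # share of total review hours those savings represent

# Assumption for illustration: treat both figures as exact values.
implied_total_hours = hours_saved / share_of_total
implied_remaining_hours = implied_total_hours - hours_saved

print(f"Implied total review hours: {implied_total_hours:,.0f}")
print(f"Implied hours remaining:    {implied_remaining_hours:,.0f}")
```

Under that assumption, the grant application review process would take on the order of 15 million hours in total, with roughly 9 million still requiring human effort after automation.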

AI readiness in federal health could mean preparing for a workforce that collaborates with AI agents across multiple roles in the future. Grants managers may be able to shift from paperwork to strategy, using agents to help inform better funding decisions. With AI agents, scientists can accelerate discoveries by processing vast data sets, identifying patterns, and even assisting in generating breakthrough innovations in vital research areas where science has been stuck for decades. HR coordinators can use agents to augment hiring, reducing time to hire and optimizing workforce planning. And clinicians can integrate AI agents into diagnostics and care coordination to get better insights on patient care and offer a more streamlined patient experience.48

The Advanced Research Projects Agency for Health has been exploring the agentic AI landscape and ways to integrate AI agents into federal health operations.49 This could signal a broader shift toward autonomous decision-making systems that are expected to enhance efficiency, accuracy, and patient outcomes.50

Federal health agencies can lead the change today

AI at scale has the power to support federal health agencies in combating health challenges facing Americans today. Federal health agencies are already reaping the benefits of AI by accelerating review time for new therapies, advancing scientific discovery, and cutting time on administrative tasks like drafting emails and summarizing documents.51

As AI appears poised to transform health nationwide, a key challenge for agencies will be successfully moving from experimentation to scaled deployment. Scaling AI means more than adopting new tools. It involves removing obstacles to innovation and laying the groundwork with solid infrastructure, which agencies can build on, powering resource efficiency across the health system.

The pace of AI adoption in government appears to be accelerating, and federal health agencies have a chance to lead this transformation. By taking deliberate steps, federal health agencies can confidently scale AI in ways that push the boundaries of innovation and advance human flourishing, both now and in the years to come.