
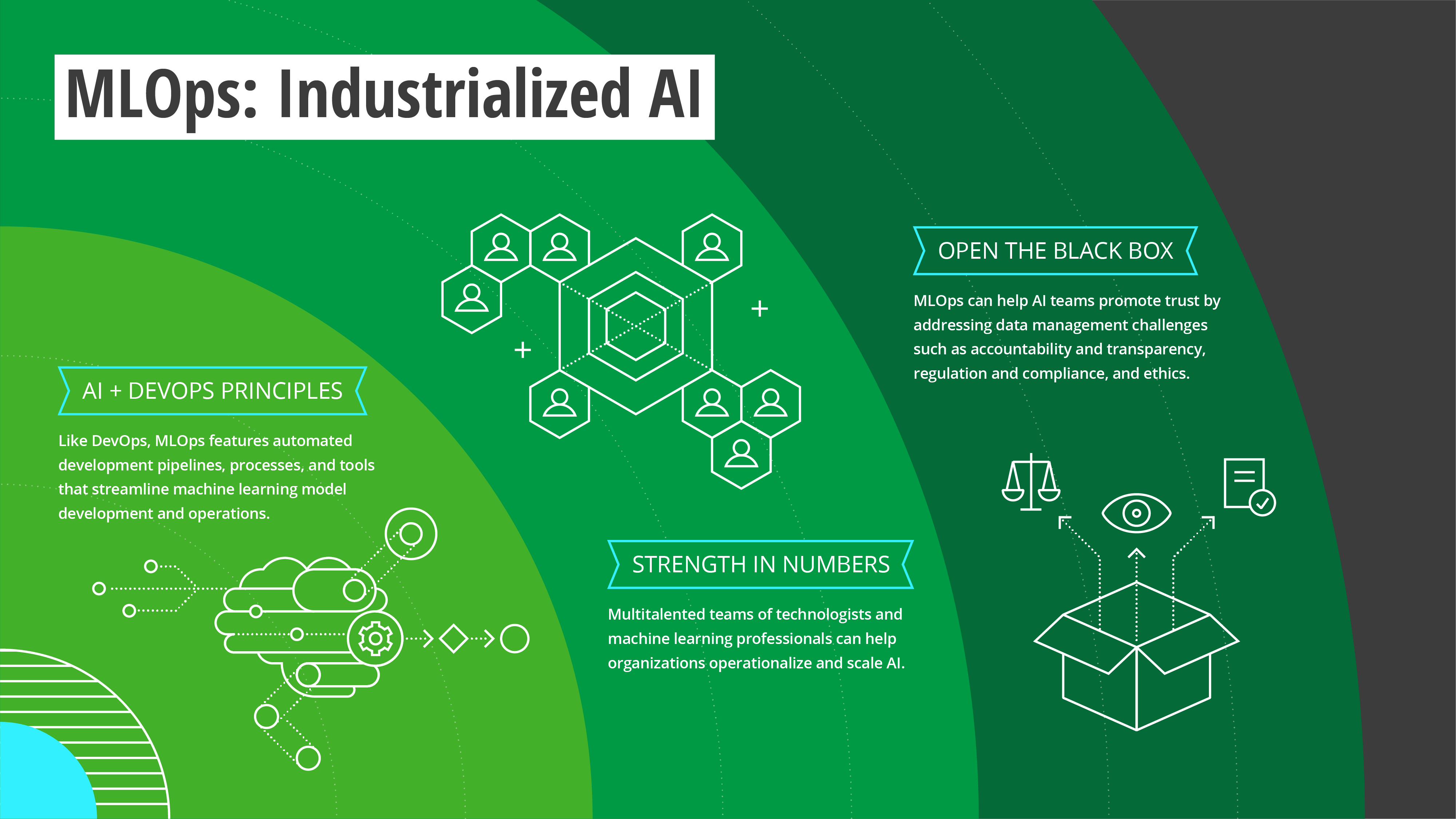

Industrialized AI

and operations with a dose of engineering and operational discipline


Lessons from the front lines

Read insights from thought leaders and success stories from leading organizations.

The next wave of AI research


Researchers at the National Oceanic and Atmospheric Administration (NOAA) are increasingly leveraging AI and machine learning to better understand the environment and make potentially life-saving predictions. With an extensive network of environmental satellites and observation systems that collect real-time weather, climate, and ocean data, the federal agency currently uses AI to interpret earth, ocean, and atmospheric observations, improve weather forecasting, monitor marine mammal and fish populations, and support many other applications.

As NOAA seeks to expand its use of AI and ML to every mission area, it recently launched an effort to improve the efficiency and coordination of AI development and use across the agency. Historically, NOAA scientists have pursued AI initiatives and developed machine learning models independently, with each researcher potentially having a different idea about how to apply AI to a specific project; development has happened organically. As a result, line offices, each made up of multiple research centers and divisions, are at different stages of maturity in the AI journey.

“To create truly transformational products, we need a more consistent, synchronized approach to AI across the agency,” says Sid-Ahmed Boukabara, principal scientist for strategic initiatives at NOAA’s Center for Satellite Applications and Research, the research arm of the National Environmental Satellite, Data, and Information Service (NESDIS).9 “We aim to dramatically expand the application of AI in every NOAA mission area by improving the efficiency, effectiveness, and coordination of AI development and usage across the agency.”

NOAA developed a bold strategy focused on achieving five strategic goals.10 One of them is the establishment of a virtual AI center that allows line offices to share best practices and integrate efforts when appropriate. The NOAA AI Center was proposed in the latest presidential budget request and is being discussed on Capitol Hill.

Regardless of where a line office, division, or center sits on the maturity curve, the NOAA AI Center is envisioned to work with its scientists and researchers to help them effectively transition AI projects from idea to operations. Initially, the agency aims to increase the use of small-scale demonstration projects in specific areas such as weather forecasting, which can serve as proofs of concept for larger-scale efforts. Another objective of NOAA’s AI strategy has been to strengthen and expand partnerships that enhance the use of AI to achieve the NOAA mission.11

In addition to fostering partnerships and coordinating AI research, the NOAA AI Center is expected to be responsible for making NOAA’s data AI-ready and available to the agency and the public; promoting ML algorithm development, AI labeling, application development, and information exchange; and raising general AI awareness and training the workforce. Technical specialists from the NOAA AI Center, embedded in the line offices, will provide researchers with the know-how, tools, and support to execute their ideas. “We’ll make sure to not stifle scientists’ creativity and instead help them conserve resources and enhance their use of AI when needed,” Boukabara says. “By cross-fertilizing knowledge across the agency, we’ll be able to benefit all line offices by efficiently leveraging the newest machine learning techniques when scientifically appropriate.”

Scaling to thousands of models in financial services


AI and machine learning technologies are helping financial services firm Morgan Stanley use decades of data to supplement human insight with accurate models for fraud detection and prevention, sales and marketing automation, and personalized wealth management, among others. With an AI practice that’s poised to grow, the firm is leveraging MLOps principles to scale AI and ML.12

“We need to be able to scale from hundreds of models to thousands,” says Shailesh Gavankar, who heads the analytics and machine learning practice in Morgan Stanley’s Wealth Management Technology department. “There are limitations to doing everything manually as long as data scientists and data analysts are working on their own ‘island’ without the ability to collaborate or share data.”

Currently, the practice uses common platforms for managing data and for developing, deploying, and monitoring ML models. To build and test models, the team created a sandbox with access to a centralized data lake that contains a copy of the data used in the production system, a technique that makes it easier to move models from development into production.

In the development environment, data scientists, business analysts, and data engineers across the practice can access the same standardized data in near-real time, enabling them to efficiently and collaboratively explore, prototype, build, test, and deliver ML models. Advanced techniques mask personally identifiable information so the teams can generate insights without exposing sensitive data. “Across our AI practice, processes are built around data accuracy and privacy,” Gavankar says. “Applying the highest standards to the training system ensures that we meet data compliance and regulatory requirements.”
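The article does not say which masking techniques the practice uses. As one illustration, here is a minimal Python sketch of keyed pseudonymization, assuming a hypothetical record layout with `name`, `ssn`, and `email` fields and a secret key held outside the code. Each identifier is replaced by an HMAC-SHA256 token, so the same person maps to the same token across tables (joins still work) while the raw value stays hidden. Keyed hashing is pseudonymization rather than anonymization, and truncating the digest to 16 hex characters is a readability choice that raises collision risk.

```python
import hashlib
import hmac

# Hypothetical PII columns; a real schema would define its own list.
PII_FIELDS = {"name", "ssn", "email"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a raw identifier with a keyed, deterministic token.

    The same input and key always yield the same token, so masked tables can
    still be joined; without the key, a token cannot be linked to its value.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]  # truncated for readability; raises collision risk


def mask_record(record: dict, key: bytes) -> dict:
    """Return a copy of `record` with every PII field replaced by a token."""
    return {
        field: pseudonymize(str(value), key) if field in PII_FIELDS else value
        for field, value in record.items()
    }


# Illustrative key only; in practice it would come from a secrets manager.
KEY = b"example-key-do-not-use"
row = {"name": "Jane Doe", "ssn": "123-45-6789", "balance": 1520.75}
masked = mask_record(row, KEY)
print(masked)  # balance unchanged; name and ssn replaced by "tok_..." strings
```

Because the tokens are deterministic for a given key, analysts in the sandbox can still count, group, and join on masked identifiers; rotating the key breaks that linkage by design.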

To support sound model governance, transparency, and accountability, the firm established an independent, in-house model risk management team. Drawing on years of experience with deploying trading models, the team assesses risk and validates the quality of ML models before they go to production. It evaluates the accuracy of the models and works to identify sources of bias or other unintended consequences. It also reviews data lineage, as well as plans for production monitoring and for intervention should a model start to drift.
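The article does not describe the team's actual checks. A minimal sketch of the two ideas in this paragraph, a pre-production validation gate and a post-deployment drift check, might look like the following. The metrics are stand-ins chosen for illustration: accuracy, the gap in positive-prediction rates between groups (one simple bias measure among many), and the Population Stability Index (PSI) over binned score distributions. All thresholds, including the commonly cited 0.1 and 0.25 PSI cutoffs, are assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class GateResult:
    passed: bool
    reasons: List[str]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def positive_rate_gap(y_pred: Sequence[int], groups: Sequence[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = []
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def validation_gate(y_true, y_pred, groups, min_accuracy=0.8, max_gap=0.1) -> GateResult:
    """Pre-production check: block promotion if accuracy is too low or the bias gap too large."""
    reasons = []
    acc = accuracy(y_true, y_pred)
    if acc < min_accuracy:
        reasons.append(f"accuracy {acc:.2f} below {min_accuracy}")
    gap = positive_rate_gap(y_pred, groups)
    if gap > max_gap:
        reasons.append(f"positive-rate gap {gap:.2f} above {max_gap}")
    return GateResult(passed=not reasons, reasons=reasons)


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions given as bin proportions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_action(psi_value: float) -> str:
    """Map PSI to an action using commonly cited (but assumed) thresholds."""
    if psi_value < 0.1:
        return "ok"
    if psi_value < 0.25:
        return "investigate"
    return "intervene"


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(validation_gate(y_true, y_pred, groups))  # passes: perfect accuracy, no gap

baseline = [0.25, 0.25, 0.25, 0.25]  # score distribution at validation time
live = [0.10, 0.20, 0.30, 0.40]      # score distribution in production
print(drift_action(psi(baseline, live)))
```

Keeping the gate and the drift check as plain functions makes it straightforward for an independent reviewer to rerun them, which fits the separation between model builders and validators described above.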

As its AI practice evolves, Morgan Stanley Wealth Management will focus on further improving speed to market by automating more of the model risk management process and integrating the sandbox and production systems. “As MLOps tools and processes enable us to operationalize models more efficiently,” Gavankar says, “we can continue to increase the number of models in production and more fully leverage AI’s ability to drive better business decisions.”

One-stop shop for model development and deployment


As AI and machine learning transform health care, health insurer Anthem, an industry leader in the use of clinical, customer-facing AI applications, is increasingly leveraging AI to reimagine and reinvent critical back-end business processes. About two years ago, the company embarked on an AI-supported journey to streamline claims management. As part of that process, leaders built a platform that consolidates model development and deployment across the enterprise.

Anthem initially built several ML models that revealed patterns in claims data, made predictions to speed processing, and identified and corrected errors. The models were successful, and leaders realized they needed to scale. “As the models began to deliver business value, we realized we needed infrastructure that could help us develop and operationalize machine learning more efficiently,” says Harsha Arcot, senior director of enterprise data science.13 “To address this challenge, we decided to build a single interface for all AI and ML solutions across the Anthem ecosystem.”

The company built an integrated development environment and an end-to-end platform that serves as a one-stop shop where developers and data scientists prepare and store training data, build and validate models through easy-to-use interfaces, and deploy them at scale. A feedback mechanism allows models to continuously learn and improve, while a separate platform monitors the performance of production models.

Simultaneously, the company has been working on an initiative to consolidate data from seven systems into a single repository. With most of that work complete, finding the data to build, train, and operationalize models is much more efficient.

The platform also gives Anthem the flexibility to replicate models across multiple use cases. For example, if a pipeline has already been built into the legacy claims system for a commercial use case, it can easily be deployed on the consumer side as well. “It’s much more efficient than when we used to develop a model for each use case from scratch,” Arcot says.

Using the platform, Anthem data scientists have developed a number of models, including those that fast-track the processing of pre-approval claims, identify and automatically reject duplicate claims, and determine whether a medical procedure needs preauthorization. Previously, a human claims examiner or clinician had to review and process all of these claims manually.
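The article says Anthem uses ML models for duplicate detection but does not describe them. A common starting point, shown here as a swapped-in rule-based sketch rather than Anthem's method, is to flag any claim whose normalized key fields all match an earlier claim. The `Claim` fields are hypothetical, and amounts are kept in integer cents to avoid floating-point comparison issues.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Claim:
    # Hypothetical claim fields, for illustration only.
    claim_id: str
    member_id: str
    provider_id: str
    service_date: date
    procedure_code: str
    billed_cents: int


def duplicate_key(c: Claim) -> Tuple:
    """Normalize the fields that, if all equal, suggest the same service was billed twice."""
    return (
        c.member_id.strip().upper(),
        c.provider_id.strip().upper(),
        c.service_date,
        c.procedure_code.strip().upper(),
        c.billed_cents,
    )


def find_duplicates(claims: List[Claim]) -> Dict[str, str]:
    """Map each duplicate claim_id to the claim_id of the first claim seen with the same key."""
    first_seen: Dict[Tuple, str] = {}
    duplicates: Dict[str, str] = {}
    for c in claims:
        key = duplicate_key(c)
        if key in first_seen:
            duplicates[c.claim_id] = first_seen[key]
        else:
            first_seen[key] = c.claim_id
    return duplicates


claims = [
    Claim("C1", "m01", "P9", date(2021, 3, 1), "99213", 12000),
    Claim("C2", "M01 ", "p9", date(2021, 3, 1), "99213", 12000),  # same service, messy formatting
    Claim("C3", "M01", "P9", date(2021, 3, 2), "99213", 12000),   # different date: not a duplicate
]
print(find_duplicates(claims))
```

An exact key misses near-duplicates, such as a slightly different billed amount or a one-day date shift; that gap is where a learned model, possibly trained on claims this rule has already confirmed, would add value.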

Arcot says the platform has dramatically increased model deployment speed. “Before we developed the platform, it took about six months to deploy very simple models,” he says. “Now we are able to develop much more complex initiatives in half the time.”


Learn more

Download the trend to explore more insights, including the “Executive perspectives” where we illuminate the strategy, finance, and risk implications of each trend, and find thought-provoking “Are you ready?” questions to navigate the future boldly. And check out these links for related content on this trend:

- Thriving in the era of pervasive AI: See how companies are managing and getting ahead of the rise in AI adoption.

- AI & cognitive technologies collection: Learn how cognitive technologies can augment manual tasks and decision-making.

- Leverage MLOps to scale AI/ML to the enterprise: Listen to a podcast on the ways MLOps can integrate AI/ML models into business processes.


Senior contributors

Paul Phillips, James Ray, and Paulo Maurício

Endnotes